Talks/DagitThesis

- Chapter 1 Introduction

- Chapter 2 Related Work

- Chapter 3 Data Model and Invariants

- Chapter 4 Checked Invariants

- Chapter 5 Discussion

- Chapter 6 Conclusion

- Bibliography

- Appendix A Existentially Quantified Types

- Appendix B Generalized Algebraic Data Types (GADTs)

- Appendix C Directed Type Examples

Chapter 1 Introduction

Version control systems require a high degree of robustness, as users trust them to safeguard their data over the life cycle of software projects. Corruption in repository data, such as the history of changes, can lead to wasted time and user frustration. Worse yet is the possibility of a bug which constructs invalid versions of the user’s data. When the data under version control is source code, this can lead to build failures or forms of corruption that go unnoticed until they pose a problem.

Software engineers have many tools to help write software applications. Many of these tools exist to tackle the challenge of writing correct software. Testing continues to be a popular approach to this challenge but testing is not usually enough to prove correctness. Instead, software engineers use testing to gain confidence that the tested program will behave as intended in most uses.

When testing is not sufficient, formal methods may be used to prove parts of the program correct. Often formal methods are applied in only the core of applications due to the high labor costs needed to use them effectively. Another approach to reducing the high cost of formal methods is to use an automated method such as a proof assistant. Proof assistants are only available in specialized domains, such as research programming languages. This means that many mainstream programming environments lack automated proof tools.

Given the importance of correctness for version control systems, we would like to eliminate as many bugs as possible from the Open Source version control system Darcs [Rou09a]. We examine the data model used by Darcs and discuss a number of invariants that must be maintained to avoid data corruption.

Darcs is implemented in the programming language Haskell, which gives us an opportunity to apply modern innovations in Programming Language research to a real-world software application. Our approach shows that the type system of the Haskell programming language together with a novel combination of language extensions, implemented by the Glasgow Haskell Compiler (GHC), can be used as a light-weight alternative to a proof assistant. We show that entire classes of bugs can be eliminated at compile time using our approach.

Finally we examine the impact of our techniques on the Darcs codebase and the challenges that arise when applying these techniques to an existing real-world application.

1.1 Background

This document shows how to encode specific invariants into types in the Haskell programming language. Although program invariants may seem unrelated to types, we use properties of Haskell’s strong static type checking to gain static guarantees about these invariants.

1.1.1 Patch Theory

The data model used and pioneered by Darcs is known as Patch Theory [Rou09b], discovered by David Roundy to solve the problem of communicating changes in a distributed fashion between contributors. Patch Theory remains distinct in that it allows users to think of their repositories as unordered collections of changes. More details can be found in Chapter 3.

1.1.2 Haskell’s Type System

We assume the reader has a basic familiarity with functional programming and the Haskell language specifically. For more details about the language features that will be discussed in this document please see Section 2.2 and Appendices A and B.

Haskell’s type system is based on Hindley-Milner type checking [Pey03]. The GHC implementation of Haskell uses a modified version of the Damas-Milner type checking algorithm [VWP06]. In fact, GHC contains many extensions compared to the language specification for Haskell [Pey03] and it is no coincidence that GHC is used as the compiler for Darcs. Many of the extensions implemented by GHC are of great value for real-world Haskell programming.

1.2 Motivation

The use of version control systems (VCS) seems to be common practice for software projects these days, as most projects use some form of version control. Example version control systems include Subversion (SVN) [Tig09], Concurrent Versions System (CVS) [Fre09], Git [Tor09], Darcs [Rou09a], BitKeeper [Bit09], Monotone [Mon09], Visual SourceSafe [Mic09], and many others.

A VCS plays a support role in a project. That is, the VCS used by a team of software developers supports the primary task of software development. For this reason it is important that the VCS be reliable and robust, otherwise the software developers could lose time dealing with their tools instead of working on their primary task of software development.

Robustness has always been important for Darcs and it has motivated the Darcs project to try new things, such as moving the implementation language from C++ to Haskell as explained by Roundy [Sto05]:

It is a little-known fact that the first implementation of Darcs was actually in C++. However, after working on it for a while, I had an essentially solid mass of bugs, which was very hard to track down.

While Darcs has a test suite that continues to grow in size and comprehensiveness, it does not provide a total solution for ensuring the level of quality assurance that users demand. The number of bugs in Darcs, despite testing, is a strong motivating factor in our decision to incorporate more proof techniques in our quality assurance process.

1.3 Structure of this document

This document introduces the related work in Chapter 2, covering automated invariant checking and the encoding of invariants in Haskell programs. In Chapter 3 we give the necessary background for understanding several key invariants of Darcs. The tools and abstractions we use to represent these invariants, along with real examples, are given in Chapter 4. Our analysis and discussion of the work are found in Chapter 5. Finally, we give a closing statement in Chapter 6.

Chapter 2 Related Work

In this chapter, we present work related to this thesis. A brief survey of the version control landscape is given in Section 2.1. An overview of type-based proofs as well as proof-carrying types can be found in Section 2.2.

2.1 Version Control Systems

Version control systems (VCS) are used by many software developers, projects and organizations. The primary feature offered by VCS software is the ability to track modifications to a collection of documents, usually program source code. Typically users are allowed to make their modifications independently and then share the modifications. Common VCS operations are covered in Section 2.1.1. A common classification among VCS is whether the modifications are shared in a distributed or centralized fashion. This distinction and where Darcs fits is covered in Section 2.1.2.

2.1.1 Commonly Supported Features

Every VCS has a notion of modification although different terminology is often used such as change, patch, or revision. The VCS stores a collection of documents along with the history of modifications in what is known as a repository.

The first step for using a VCS is usually to get a copy of a repository where the user can make modifications. Once modifications have been made, the VCS requires the user to record, or commit, the modifications. Doing so creates an entry in the history that contains the changes and usually a description entered by the user. After making and recording modifications users will often need to share their work with others who have a copy of the repository. The different ways of sharing are covered in the next section.

An important feature of most VCS is that of branching and merging. Creating a branch means making a copy of the repository which diverges from the original repository. In a software development project this might be done to facilitate the design and development of an experimental new feature while applying bug fixes to a stable version. Following this example, once the new feature is complete we would like to merge the two repositories so that we are left with one repository with both the completed new feature and the bug fixes to existing functionality. How branching and merging function also depends on the distinction between centralized and decentralized version control.

2.1.2 Centralized and Decentralized Version Control

How to share the changes, the ways in which they can be shared, and the order that they can be shared varies between VCS. Many VCS require that there is a central repository which collects all the changes and users connect to it to share and receive changes. Some VCS allow changes to be shared directly between copies of the repository in a decentralized fashion.

Well-known examples of centralized VCS include Subversion (SVN) [Tig09], Concurrent Versions System (CVS) [Fre09], Perforce [Per09], and Visual SourceSafe [Mic09]. Each of these VCS operates in a client-server manner. The central repository is the server and each user has a client repository which communicates only with the central repository.

Decentralized, also known as distributed, VCS allow repositories to communicate directly, removing the client and server distinction found in centralized VCS. Well-known examples of decentralized VCS include Darcs [Rou09a], Mercurial [Sel09], Git [Tor09], and Bazaar [Can09].

Modifications made with a centralized VCS may be stored in the order that they are committed to the central repository. This provides a natural linear progression of modifications and typically forces an implicit dependency between the modifications. Generally, new modifications must be made on top of all previous modifications. For example with SVN, users typically must update their local repository with modifications from the central repository before committing new changes.

With decentralized VCS the task of sharing changes becomes more complex as it is often equivalent to merging two repositories. For example, in the Darcs data model each copy of a repository is considered a branch and every time patches are shared it is equivalent to a merge in the SVN data model. In fact, these spontaneous branches set Darcs apart even within the category of decentralized VCS. Other decentralized VCS, such as Git, store modifications in a specific order whereas Darcs allows the order of modifications to be reordered according to the rules of Patch Theory discussed in Chapter 3.

2.2 Type Level Proofs

Although our implementation work is done inside of Darcs, our focus is not on the VCS aspects. Instead we are focused on using the type system as a theorem prover and proof assistant. We discuss Haskell-based type level proofs in Section 2.2.1. We briefly discuss type system based proofs in mainstream languages and dependently typed languages in Section 2.2.2.

2.2.1 Haskell

Here we focus on proofs and proof techniques based in Haskell’s type system. Much of the research in Haskell that uses the type system for proofs centers around the use of type classes. This may be due in part to how long type classes have been available in Haskell and their standardization. More recent work in this area has demonstrated the power of Generalized Algebraic Data Types (GADTs). Appendix B contains a brief overview of GADTs and examples involving GADTs can be found in Chapter 4.

Language Features

The Haskell programming language [Pey03] specifies Hindley-Milner type inference and checking. Hindley-Milner type inference combined with type classes, nested types and recursive types gives Haskell programmers a plethora of interesting and useful idioms and techniques. Some of the techniques and idioms discussed in the research allow the Haskell type checker to serve as a proof assistant at compile time. In addition to the features above, we focus on several other features supported by the Haskell compiler GHC [GHC09c]:

- Generalized Algebraic Data Types (GADTs), developed by Xi et al., Peyton Jones et al., and Cheney and Hinze [XCC03,PVWW06,CH03];

- Existentially quantified types [LO94], commonly referred to as existential types and explained in Appendix A; and

- Phantom Types [LM99].

We have two main uses for existential types: first, we borrow the idea of branding [KS07] when we need a type that is distinct; and second, we use them to express certain type relations in our data types without exposing the exact types in the type of the data structure.

Both existentially quantified types and phantom types are implied by using GADTs, but our usage of them is important enough to warrant introducing them separately.

Some authors, such as Baars and Swierstra, have used the term witness type to refer to a type that serves as a witness of a proof. For example the type could represent a proof that two types are equal [BS02]. We adopt this terminology in our work.

Witness types are chiefly useful to us as a means of ensuring certain invariants are preserved. In the case of Darcs we would like to be able to change the semantics, fix bugs or refactor the code and always know that the properties of Patch Theory, such as those discussed in Chapter 3, have been respected.
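To make the idea of a witness type concrete, the following is a minimal sketch written with a GADT. It is an illustration only: it is not the encoding of Baars and Swierstra, nor necessarily the one used in Darcs, and the names `Equal`, `Refl`, `castWith`, `sym` and `trans` are chosen here for the example.

```haskell
{-# LANGUAGE GADTs #-}

-- A value of type 'Equal a b' can only be constructed when a and b are
-- the same type, so holding such a value is evidence of type equality.
data Equal a b where
  Refl :: Equal a a

-- Pattern matching on the witness tells the type checker that a ~ b,
-- which makes the conversion below type correct.
castWith :: Equal a b -> a -> b
castWith Refl x = x

-- Equality witnesses can be flipped ...
sym :: Equal a b -> Equal b a
sym Refl = Refl

-- ... and chained.
trans :: Equal a b -> Equal b c -> Equal a c
trans Refl Refl = Refl
```

A function that accepts an `Equal a b` argument can rely on the two types agreeing without any further check, which is the sense in which the value carries a proof.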

Peyton Jones et al. [PVWS07] extended the type system used by GHC to handle arbitrary rank, which leads to so-called “sexy types.” Sexy types include higher rank polymorphism and existential types. Additionally, sexy types give us precisely the power we need to express run-time invariants through the type system, as demonstrated by Shan [Sha04]:

… skillful use of sexy types can often turn what is usually regarded as a run-time invariant into a compile-time check. To implement such checks is to reify dynamic properties of values as refined distinctions between types. These distinctions in turn increase the degree of heterogeneity among types in the program.

Hinze shows that higher rank types can also be used to enforce a wide variety of invariants in data types [Hin01].

Using existential types, Kahrs shows us how to encode the invariants of red-black trees [Kah01]. The existential types are used in the data type declaration to control unification of phantom types. We use a similar means to control unification of phantom types in our implementation. As Kahrs mentions, using phantom types in this way has two advantages: the phantom types add no run-time cost, and they can be removed later, once the code is known to preserve invariants.

Type Class Based

Using type classes, it is possible to implement a statically checked run-time test for type equality using witness types, as detailed by Baars and Swierstra [BS02]. Implementing type equality this way does have one drawback, as demonstrated by Kiselyov [Kis09]: it is possible to weaken the type system through malicious type class instances. In Section 5.2.3, we discuss a similar problem that threatens the context equality that we use in the Darcs implementation.

Type classes, especially when combined with functional dependencies, allow for computations in the type system as explained by Hallgren [Hal01]. Any purely functional computation that terminates appears to be possible at the type level. For example, basic arithmetic on type level natural numbers is relatively easy to express. One drawback to this variety of type level proof is that by enabling this level of computation in the type system we lose the property that type checking will always terminate.
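As a small sketch of such type level computation, addition on Peano numerals can be written with a multi-parameter type class and a functional dependency. The names `Zero`, `Succ`, `Add` and `Nat` are assumptions made for this example and are not taken from Hallgren's paper. Note that the second `Add` instance requires `UndecidableInstances`, which reflects the loss of guaranteed termination mentioned above.

```haskell
{-# LANGUAGE MultiParamTypeClasses, FunctionalDependencies,
             FlexibleInstances, UndecidableInstances #-}

-- Type level Peano numerals. Each type has exactly one value, so all of
-- the information lives in the type.
data Zero   = Zero
data Succ n = Succ n

-- 'Add a b c' holds when a + b = c. The dependency a b -> c tells the
-- type checker that the first two parameters determine the third.
class Add a b c | a b -> c where
  add :: a -> b -> c

instance Add Zero b b where
  add Zero b = b

instance Add a b c => Add (Succ a) b (Succ c) where
  add (Succ a) b = Succ (add a b)

-- Reflect a numeral back to an ordinary Int for inspection.
class Nat n where
  toInt :: n -> Int

instance Nat Zero where
  toInt Zero = 0

instance Nat n => Nat (Succ n) where
  toInt (Succ n) = 1 + toInt n

two :: Succ (Succ Zero)
two = Succ (Succ Zero)

three :: Succ (Succ (Succ Zero))
three = Succ (Succ (Succ Zero))

-- The signature is checked against the sum computed by instance
-- resolution; writing a numeral other than five here is a type error.
five :: Succ (Succ (Succ (Succ (Succ Zero))))
five = add two three
```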

Kiselyov and Shan [KS04] provide a powerful example of how Haskell’s type classes can be used to turn values into types and back again. These authors give a way to reify any value that can be serialized into the type system. A major drawback of using this approach is that it adds run-time overhead. Converting back and forth between types and values requires processing overhead and there is also the overhead of passing run-time data for each type. The run-time overhead can be proportional to the “size” of the type [McB02]. Our implementation is already burdened by performance issues and so we seek to avoid adding any additional run-time overhead.

Silva and Visser [SV06] give another great example of Haskell programmers reaching for more static safety by exploiting the type system and HList [KLS04]. As Silva and Visser describe their work:

We explain how type-level programming can be exploited to define a strongly-typed model of relational databases and operations on them. In particular, we present a strongly typed embedding of a significant subset of SQL in Haskell. In this model, meta-data is represented by type-level entities that guard the semantic correctness of database operations at compile time.

By using HList, values with heterogeneous types may be stored together in a record, or list, of arbitrary size. While this is similar to our Directed Lists (see Section 4.6), we would like to place more constraints on our data types, as Hinze [Hin01] does, and also to avoid exposing the intermediate types of the elements in our directed types.

The libraries Dimensionalized Numbers [Den09] and Dimensional [Buc09] both take the approach of exposing extra information to the type system to achieve correct unit manipulations. In both of these cases the correctness the authors want to model is that arithmetic operations should respect the physical units involved.

GADT Based

Eaton [Eat06] gives a clever way to expose matrix dimensionality to the type system so that only operations which respect the dimensions of arrays and matrices statically are allowed. This approach is interesting because it is not unlike our own and yet uses GADTs only incidentally; that is, GADTs are not a core requirement of the approach. As the author says, the technique is to “expose certain properties of operands to a type system, so that their consistency could be statically verified by a type checker, then we would be able to catch many common errors at compile time.”

The presented approach uses type classes and the type reflection technique presented by Kiselyov and Shan [KS04]. In line with our experience, Eaton also points out that doing so enlarges type signatures in an unpleasant way. A major difference between our implementation and that of Eaton is Eaton's use of functional dependencies [Jon00]. Functional dependencies allow the programmer to place constraints on the types used in a type class. If our approach relied on type classes we would probably use functional dependencies as well. A minor difference between our approaches is that, while we use data types with existentially quantified types as wrappers so that functions may return existential types, Eaton prefers to use a CPS transformation. This transformation leads to equivalent types [Sha04, Eat06]. Eaton also notices that type checking becomes difficult enough to burden the programmer, and comments that data flow analysis may be able to improve type error messages. Such an improvement by any means would be very welcome.

Greif [Gre08] applies the same data declarations that we use for directed lists, Section 4.6, to implement Thrists, or type threaded lists. Although this work is unpublished, according to the author it is inspired by the brainstorming session at Haskell’05 workshop in Tallinn. This session is where our directed lists were born. Greif provides a library for Thrists in both Ωmega and Haskell, with several example applications including parsers and interpreters.

Faking Dependent Types

Although we do not use a dependently typed language for our implementation, we do approximate, or simulate, dependent typing within Haskell to achieve some of our goals. McKinna [McK06] explains the benefits of dependently typed programming:

Type systems without dependency on dynamic data tend to satisfy the replacement property—any subexpression of a well typed expression can be replaced by an alternative subexpression of the same type in the same scope, and the whole will remain well typed. For example, in Java or Haskell, you can always swap the then and else branches of conditionals and nothing will go wrong—nothing of any static significance, anyway. The simplifying assumption is that within any given type, one value is as good as another. These type systems have no means to express the way that different data mean different things, and should be treated accordingly in different ways. That is why dependent types matter.

This observation exactly characterizes why Darcs became fragile and why we seek to simulate dependent typing. Replacing patches in, concatenating and rearranging patch sequences was always statically valid even when it would result in corrupt repositories. For this reason we sought out techniques that would give us the benefits of dependent typing in Haskell.

Using type level numerals, Fridlender and Indrika [FI00] show a simple way to work around the lack of dependent types in Haskell. The main example given allows us to make a version of the standard Haskell function zipWith, which is referred to as zipWithN, that is type indexed by a type level numeral. The numeral represents the number of parameter lists passed to zipWithN. This approach is representative of simulating dependent typing with Haskell. One type is created for each value, in this case type level numerals. To simulate the values inhabiting a type we can make each type an instance of the same type class. Thus the values correspond to Haskell types and the types correspond to Haskell type classes.

McBride [McB02] explores the simulation of dependent typing in Haskell. This paper explains various tricks to simulate dependent typing and how they are related. It also clearly explains how type classes allow the programmer to simulate some type families. He also comments on the limitations of type inference and what can be accurately encoded when using nested types such as those used by Okasaki [Oka99]. McBride warns us that run-time overhead of type class heavy techniques may be proportional to the size of the type signatures. In the GHC implementation this results from implicit passing of type dictionaries for functions that rely on type classes.

Guillemette and Monnier [GM08] found that it was possible to represent subset and superset relationships in the type system using GADTs. This also required a way to implement type equality as a run-time test. Their techniques are very similar to ours even though the domain is very different, a type-preserving compiler. They use type level Peano numbers to represent de Bruijn indices.

Kiselyov and Shan [KS07] tag, or brand, values with types that represent certain capabilities. For example, by creating a new list datatype where the type of the list is parametrized by a brand we can statically enforce non-emptiness of lists. The brand is part of the type of the list and acts as a proof of a capability such as whether the list is empty or non-empty. The work done here is in OCaml but applies equally well to Haskell and can be used even without dependent typing, although this requires that we use a trusted kernel of code which may do run-time checks to generate the correct branding. Once we have the branding in place the type system can do the verification, thus we can restrict our intensive verification to just the trusted kernel. This is essentially the approach we have taken for directed lists. This work is also similar to the examples of dependent typing given by Xi [XP98]. Xi uses restricted dependent types to remove array bounds checking.
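The division between a trusted kernel and statically verified client code can be sketched with a phantom type parameter acting as the brand. This is a deliberately simplified illustration, not the technique of Kiselyov and Shan (whose work is in OCaml and is considerably more general); the names `BList`, `Empty`, `NonEmpty`, `fromList` and `safeHead` are hypothetical.

```haskell
-- Brands: types with no values, used only as type level tags.
data Empty
data NonEmpty

-- A list tagged with a brand. In a real module the BList constructor
-- would not be exported, so that only the trusted functions below can
-- decide which brand a list receives.
newtype BList brand a = BList [a]

nil :: BList Empty a
nil = BList []

-- Consing onto any list yields a list that is known to be non-empty.
cons :: a -> BList brand a -> BList NonEmpty a
cons x (BList xs) = BList (x : xs)

-- Trusted kernel: the single run-time check that assigns a brand.
fromList :: [a] -> Either (BList Empty a) (BList NonEmpty a)
fromList [] = Left nil
fromList xs = Right (BList xs)

-- Client code needs no emptiness check because the brand is the proof.
safeHead :: BList NonEmpty a -> a
safeHead (BList (x : _)) = x
safeHead (BList [])      = error "unreachable when brands come from the kernel"
```

The guarantee is only as strong as the kernel: any code with access to the `BList` constructor could forge a `NonEmpty` brand, which is why verification effort concentrates there.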

By using “nested types, polymorphic recursion, higher-order kinds, and rank-2 polymorphism,” Okasaki [Oka99] is able to encode vector and matrix dimensions into types. This encoding ensures that matrix and vector operations can be statically checked for correctness.

2.2.2 Non-Haskell

Proving properties and carrying the proofs with types is not limited to Haskell. Skalka and Smith [SS00] propose a type system for statically enforcing security using the JVM security model. The type system carries proofs about the code as it is compiled. For this to work, static type inference is required, which means that their static security does not work without a modified Java compiler.

Java is not the only mainstream programming language that is receiving attention from type based proofs. Kennedy and Russo [KR05] have found a way to bring the power of GADTs to C# and Java. Hopefully in the future many of the approaches discussed here will apply in mainstream languages. Before we can freely use GADTs in C# the compiler would need to be augmented with the special type checking rules described.

Xi and Scott [XS99] make a very good survey of work done in dependent typing, give examples where it helps and explain why it is an important subject.

One example of using language features similar to GADTs arises in a dependently typed variant of ML known as Dependent ML. Chen and Xi [CX03] use Dependent ML to implement type correct program transformations.

Sheard [She05] explains, with examples, a Haskell-like language known as Ωmega. Ωmega has GADTs but unlike Haskell it offers strict evaluation and features designed to ease using the type system for proofs. Unfortunately we could not use Ωmega without a substantial rewrite of Darcs. It is also not clear that Ωmega is ready for real-world use. We hope that the techniques we demonstrate help answer a question posed by the author about the way in which other features such as rank-n polymorphism magnify the benefit of GADTs.

Chapter 3 Data Model and Invariants

Now we turn to establishing the theory underlying Darcs. We assume the reader has basic familiarity with the use of version control systems. Here we describe the fundamentals of Patch Theory [Rou09c] as it relates to version control. Not all of Patch Theory has been made rigorous and precise at this time although Roundy has made several presentations on Darcs that include discussions of Patch Theory [Rou06a, Rou06b, Rou08]. We begin with some definitions and then discuss several properties of patch manipulation.

3.1 Elements of Patch Theory

In this section we make precise terminology that is commonly used in the Darcs community.

Patch Theory is designed to allow users to independently change their data and then share those changes. A patch is a way of recording, storing and communicating changes. Before we give a precise definition of patch we define some of the important concepts in Patch Theory.

Definition 3.1.1. A repository consists of a sequence of patches and a working copy.

The sequence of patches in the repository represents a set of changes. We want the user to work with patches in such a way that the set of changes define the exact contents of the repository and allow the user to think in terms of sharing changes between repositories. For example, we would like for a merge of two repositories to be simply the union of their sets of changes.

Each repository may have several states. For example, the state that results from applying all of the patches in the repository is called the pristine state.

Definition 3.1.2. A repository state is a collection of directories, files and the contents of those files.

We give a special name to the pristine state as it gives us a convenient way to discuss the effect of applying patches while ignoring any changes that have not yet been recorded by the user.

The working copy of the repository is where users do their work between version control operations. In Darcs the working copy is a directory storing the user’s files and data as the user currently chooses to see it and work with it. Example operations involving patches are removing patches, recording new patches, and applying patches from a different repository. Each of these operations results in a new working copy corresponding to a new state.

Definition 3.1.3. A context is a sequence of patches that can be applied to the empty state. The empty state refers to an empty collection of directories and files.

We can now give a more precise definition of patch.

Definition 3.1.4. A patch is a concrete representation of a change made to the state of a repository. Each patch is a transformation on repository state, and must be an invertible transformation. Each patch also depends on a context as defined in Definition 3.1.3.

A few example patch types include changes to file contents, file renames, and file additions and deletions.
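The requirement that every patch be an invertible transformation of state can be illustrated with a small sketch. It makes several simplifying assumptions that are not part of the definitions above: repository state is reduced to a map from file names to contents (no directories), contexts are ignored, and the type `Prim` with its constructors is invented for this example rather than taken from Darcs.

```haskell
import qualified Data.Map as Map

-- Simplified repository state: file names mapped to file contents.
type State = Map.Map FilePath String

-- A few primitive changes, mirroring the example patch types above.
data Prim
  = AddFile FilePath             -- create an empty file
  | RmFile FilePath              -- delete an empty file
  | Rename FilePath FilePath     -- rename a file
  | Edit FilePath String String  -- replace old contents with new contents
  deriving (Eq, Show)

-- Every change has an inverse that undoes it.
invert :: Prim -> Prim
invert (AddFile f)      = RmFile f
invert (RmFile f)       = AddFile f
invert (Rename f g)     = Rename g f
invert (Edit f old new) = Edit f new old

-- Applying a change fails (Nothing) when the state is not one the
-- change was made for, a crude stand-in for a pre-context mismatch.
apply :: Prim -> State -> Maybe State
apply (AddFile f) s
  | Map.member f s = Nothing
  | otherwise      = Just (Map.insert f "" s)
apply (RmFile f) s
  | Map.lookup f s == Just "" = Just (Map.delete f s)
  | otherwise                 = Nothing
apply (Rename f g) s =
  case Map.lookup f s of
    Just c | not (Map.member g s) -> Just (Map.insert g c (Map.delete f s))
    _                             -> Nothing
apply (Edit f old new) s
  | Map.lookup f s == Just old = Just (Map.insert f new s)
  | otherwise                  = Nothing
```

In this sketch, whenever `apply p s` succeeds with some state, applying `invert p` to that state returns the original `s`. Recording the old contents in `Edit` is what makes the edit invertible.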

We will use bold capital letters (e.g. A, B) to refer to patches.

Each patch has exactly two contexts, the context required to apply the patch, the pre-context, and the context that results from applying the patch, the post-context.

Definition 3.1.5. The pre-context of a patch is the context that exists prior to the patch and is required to apply the patch. Similarly the post-context of a patch is the context that results from appending the patch to the pre-context.

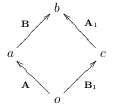

Lowercase italic letters will refer to contexts, and will be placed in the superscript position in order to describe the pre- and post-contexts of a patch, as in \({}^{o}A^{a}\). For example, if the repository has a context of o and the user then edits one file and records a new patch A, then the context might then be a. Thus, the user has created a patch with pre-context o and post-context a. To denote this we would write \({}^{o}A^{a}\), where a is equal to the context o with patch A appended to it.

A repository might contain two patches, \({}^{o}A^{a}\) and \({}^{a}B^{b}\), in which case we could put them in a sequence and simply write \({}^{o}A^{a}B^{b}\). Note that since the post-context of A matches the pre-context of B we only write the context a once. Often the contexts may be understood and are omitted, as in AB.
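The rule that patches may only be sequenced when the post-context of one matches the pre-context of the next can be sketched in Haskell by giving each patch two phantom type parameters. The following is an illustration under assumed names (`Patch`, `Seq`, and the context tags `O`, `A`, `B`); it is not necessarily the representation used in Darcs.

```haskell
{-# LANGUAGE GADTs #-}

-- Hypothetical type level tags standing in for the contexts o, a and b.
data O
data A
data B

-- A patch indexed by its pre-context and post-context. Both parameters
-- are phantom: they appear in the type but not in the stored data.
newtype Patch pre post = Patch String

-- A sequence of patches whose type guarantees that adjacent contexts
-- line up. The middle context is hidden (existentially quantified).
data Seq pre post where
  Nil  :: Seq ctx ctx
  Cons :: Patch pre mid -> Seq mid post -> Seq pre post

patchA :: Patch O A
patchA = Patch "A"

patchB :: Patch A B
patchB = Patch "B"

-- Accepted: the post-context of A equals the pre-context of B.
ab :: Seq O B
ab = Cons patchA (Cons patchB Nil)

-- Rejected by the type checker, because B cannot be followed by A:
--   ba = Cons patchB (Cons patchA Nil)

-- Contexts play no role at run time; we can still traverse the sequence.
names :: Seq pre post -> [String]
names Nil                   = []
names (Cons (Patch n) rest) = n : names rest
```

Only the outermost contexts appear in the type of `ab`, mirroring the way the shared context a is written once in the sequence notation.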

3.2 Commute

When the result of composing two functions is the same regardless of composition order, the functions are said to be commutative. Since our patches contain a transformation of state, we would like to commute patches. Commutation of patches will give us a natural way to reorder sequences of patches and a way to implement merging of patches. If we have two invertible transformations of state, \(T_1\) and \(T_2\) such that

\[T_1 \circ T_2 = T_2 \circ T_1,\]

then we say that the functions \(T_1\) and \(T_2\) are commutative functions.

We must note that above, \(T_1\) and \(T_2\) are not patches because we have not associated pre- and post-contexts to them. What we mean is that we have two functions with domains and ranges such that they can be composed either way and the resulting transformation of state is the same.

To construct patches from \(T_1\) and \(T_2\) we associate with each a pre-context. Suppose the patch A was created from the transformation \(T_1\) in a repository of context o. Then let A have pre-context o and let the resulting post-context be a. That is, we have constructed a patch \({}^{o}A^{a}\). Similarly, suppose the transformation \(T_2\) is then applied and a patch is created with pre-context a and post-context b; let this patch be \({}^{a}B^{b}\). So far we have constructed \({}^{o}A^{a}\) and \({}^{a}B^{b}\) from \(T_1\) and \(T_2\) in such a way that \({}^{o}A^{a}\) and \({}^{a}B^{b}\) are restricted versions of \(T_1\) and \(T_2\). That is, \({}^{o}A^{a}\) and \({}^{a}B^{b}\) have the same effect on state but may only be applied or composed in their respective pre- and post-contexts.

By construction, \(T_1\) and \(T_2\) are commutative functions and now we investigate what happens when we commute patches by exploring an example.

3.2.1 Example

To understand the difference between commuting functions and commuting patches, we will work through an example involving file renames and modifications to the contents of those files. This example shows how patches are transformed by commutation. Suppose we have a repository with two specific files named X and Y . We could then define the following transformations of state, which simply rename the files:

- rename Y to Z

- rename X to Y

- rename Z to X

Suppose also that we make an edit to X and an edit to Y. Let us name these transformations in general as follows,

- \(R(x, y)\) = rename x to y

- \(E_1(x)\) = fixed but arbitrary edit to file x

- \(E_2(x)\) = fixed but arbitrary edit, different from \(E_1(x)\), to file x.

Note that in general \(E_1(x)\) and \(E_2(x)\) depend on the specific contents of the file x.

Using our files X and Y, we see that \(E_1(X)\) and \(E_2(Y)\) could be applied to the repository in either order. In other words, both \(E_1(X) \circ E_2(Y)\) and \(E_2(Y) \circ E_1(X)\) transform the repository in exactly the same way. This follows from the contents of X and Y being independent of each other.

In this section only, we introduce a new patch notation to make our example commutes clearer. In later sections we switch back to our more abstract patch notation. Since each patch corresponds to a transformation of state, say T, with a specific pre-context a and post-context b, we denote the patch by \(a[[T]]b\).

As before, we omit the contexts when they are understood or unimportant. As we will see later, each commute introduces a new pair of patches, and this new notation frees us from the task of distinctly naming each patch. This notation also allows us to focus on the state transformation and contexts of the patch.

Our first example uses the patch sequence \(o[[E_1(X)]]a[[R(X,Y)]]b\). We are assuming that the context o ensures we have a file X but that no file named Y exists. This sequence of patches edits file X and then renames X to Y.

If we step back and view the above sequence of patches as a composition of transformations, \(R(X,Y) \circ E_1(X)\) (the order of function composition is the reverse of the order for patch sequences), then we see that these transformations are not commutative because it does not make sense to edit the file X after renaming X to Y. The reason is simple: the file X would no longer exist when we try to apply the edit transformation.

Instead of trying to commute \(E_1(X)\) with \(R(X,Y)\), we could consider \(E_1(Y) \circ R(X,Y)\). We arrive at this composition by observing that once X has been renamed to Y we would like to apply our edits to the file Y instead of X. This new composition of transformations would give us the same state as the original composition but with the order of operations reversed. We can apply this idea to swapping the order of patches as well.

Now we swap the order of the patches and reason about the effect on the transformations stored inside the patches,

\[o[[E_1(X)]]a[[R(X,Y)]]b \rightarrow o[[R(q,r)]]c[[E_1(s)]]d,\]

where q, r, and s are placeholders that we will reason about now. A first guess at the values for q, r, and s might be q = X, r = Y, and s = X, but this does not take into consideration the reordering of the operations. When we commute these patches, we must consider whether the transformation \(E_1(X)\) affects the transformation \(R(X,Y)\). Renaming a file is independent of the contents of the file, so we see that the transformation \(R(X,Y)\) should not be affected and thus q = X and r = Y. When we consider whether \(E_1(X)\) is affected by \(R(X,Y)\), we realize that the edit should be applied to Y instead of X. After the reordering we are renaming the file before applying the edit, and this means that we must now apply the edit to the new name of the file. Therefore, after the rename of X to Y the edit to X should be applied to Y, and we see that s = Y. Thus we get the following result,

\[o[[E_1(X)]]a[[R(X,Y)]]b \rightarrow o[[R(X,Y)]]c[[E_1(Y)]]d.\]

Finally, notice that the contexts of the patches are different before and after reordering the patches. Context is defined to be a sequence of patches and so reordering the patches changes the sequence. Intuitively, we want the context b to be equivalent to the context d, but we save this discussion for Section 3.2.2.

Now we turn to a slightly bigger example. This time we assume that the context of the repository is such that the files with names X and Y exist but there is no file named Z.

Consider the patches \(o[[E_1(X)]]a\) and \(a[[E_2(Y)]]b\). Similarly, suppose we create the patch sequence \(b[[R(Y,Z)]][[R(X,Y)]][[R(Z,X)]]e\) that swaps the file names of X and Y. For the remainder of this example, we will omit the contexts of the patches, as we are chiefly interested in the effect of commutation on patches. In the following sections we will examine the effect that commute has on context. This gives us a patch sequence,

\[[[E_1(X)]][[E_2(Y)]][[R(Y,Z)]][[R(X,Y)]][[R(Z,X)]].\]

In English, \([[E_1(X)]][[E_2(Y)]]\) modifies the file named X and modifies the file named Y, while \([[R(Y,Z)]][[R(X,Y)]][[R(Z,X)]]\) swaps the names of X and Y. Therefore, this sequence modifies X, modifies Y, and finally swaps the file names X and Y.

First we will commute \([[E_2(Y)]]\) all the way to the right and then commute \([[E_1(X)]]\) to the right. When we commute \([[E_2(Y)]]\) with \([[R(Y,Z)]]\) we get \([[R(Y,Z)]][[E_2(Z)]]\), using the same reasoning as the previous example.

Showing this commute as one step we write,

\[[[E_1(X)]][[E_2(Y)]][[R(Y,Z)]][[R(X,Y)]][[R(Z,X)]]\] \[\rightarrow [[E_1(X)]][[R(Y,Z)]][[E_2(Z)]][[R(X,Y)]][[R(Z,X)]].\]

Next we commute \([[E_2(Z)]]\) with \([[R(X,Y)]]\). This time the commute is trivial since the transformations are independent of each other and results in,

\[[[E_1(X)]][[R(Y,Z)]][[E_2(Z)]][[R(X,Y)]][[R(Z,X)]]\] \[\rightarrow [[E_1(X)]][[R(Y,Z)]][[R(X,Y)]][[E_2(Z)]][[R(Z,X)]].\]

When we commute \([[E_2(Z)]]\) and \([[R(Z,X)]]\) the outcome is similar to the first commute, and we need to update the transformation in the patch \([[E_2(Z)]]\) to modify the file X. The resulting sequence is,

\[[[E_1(X)]][[R(Y,Z)]][[R(X,Y)]][[E_2(Z)]][[R(Z,X)]]\] \[\rightarrow [[E_1(X)]][[R(Y,Z)]][[R(X,Y)]][[R(Z,X)]][[E_2(X)]].\]

When we commute \([[E_1(X)]]\) through the sequence there are again only two commutes where we update the state transformation. After doing all the commute steps we would have the following sequence,

\[[[E_1(X)]][[R(Y,Z)]][[R(X,Y)]][[R(Z,X)]][[E_2(X)]]\] \[\rightarrow [[R(Y,Z)]][[E_1(X)]][[R(X,Y)]][[R(Z,X)]][[E_2(X)]]\] \[\rightarrow [[R(Y,Z)]][[R(X,Y)]][[E_1(Y)]][[R(Z,X)]][[E_2(X)]]\] \[\rightarrow [[R(Y,Z)]][[R(X,Y)]][[R(Z,X)]][[E_1(Y)]][[E_2(X)]].\]

To summarize, we started from this sequence,

\[[[E_1(X)]][[E_2(Y)]][[R(Y,Z)]][[R(X,Y)]][[R(Z,X)]],\]

and after several commutation steps we arrived at the sequence

\[[[R(Y,Z)]][[R(X,Y)]][[R(Z,X)]][[E_1(Y)]][[E_2(X)]].\]

The two sequences are different operationally but they modify the state of the repository in the same way. In particular, notice that we apply the transformation \(E_1(x)\) to Y after the reordering, but before the reordering it was applied to X. The patch containing the transformation \(E_2(x)\) underwent a similar modification.

If we had simply treated the state transformations as commutative functions, then we would have an invalid composition of transformations. After all of the reordering in this example, \(E_1(X)\) would still be a transformation on the contents of a file with name X even though the file with name X was renamed to Y. Thus, \(E_1(X)\) would modify the wrong file contents.

In the first example we saw that patch commutation always modifies the context of the patches and only some of the time changes the state transformation. Also, each new commutation step gives a new sequence yet each sequence defines the same final repository state.

These examples were designed so that all of the patch commutations would succeed, but in general commutation of two patches may not be possible. For example, it does not make sense to commute a patch that creates a file with a patch that modifies that file. We also do not attempt to define patch commute for patches that are not adjacent in a patch sequence.
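The commutes worked through in this example can be replayed in a small untyped model. The following is only a sketch under stated assumptions: the type `Toy`, the functions `commuteToy` and `pushRight`, and the rule that two edits to the same file never commute are all hypothetical simplifications and are not taken from the Darcs source.

```haskell
-- Toy model of the example: a patch is either an edit to a named file
-- or a rename. Contexts are not tracked here at all.
data Toy
  = Edit Int String       -- edit number (1 for E1, 2 for E2) and target file
  | Rename String String  -- rename the first file to the second
  deriving (Eq, Show)

-- Commute two adjacent patches, given in application order.
-- Nothing means this sketch does not know how to commute the pair.
commuteToy :: (Toy, Toy) -> Maybe (Toy, Toy)
commuteToy (Edit n f, Rename x y)
  | f == x    = Just (Rename x y, Edit n y)  -- the edit follows the file to its new name
  | f == y    = Nothing                      -- the rename target would already exist
  | otherwise = Just (Rename x y, Edit n f)  -- independent files
commuteToy (Rename x y, Edit n f)
  | f == y    = Just (Edit n x, Rename x y)  -- the edit moves back to the old name
  | f == x    = Nothing                      -- x no longer exists after the rename
  | otherwise = Just (Edit n f, Rename x y)
commuteToy (Edit n f, Edit m g)
  | f /= g    = Just (Edit m g, Edit n f)    -- edits to different files are independent
  | otherwise = Nothing                      -- conservative: same-file edits do not commute
commuteToy _ = Nothing                       -- rename/rename is left out of the sketch

-- Commute one patch rightwards past every patch in a list.
pushRight :: Toy -> [Toy] -> Maybe ([Toy], Toy)
pushRight p []       = Just ([], p)
pushRight p (q : qs) = do
  (q', p')   <- commuteToy (p, q)
  (qs', p'') <- pushRight p' qs
  Just (q' : qs', p'')
```

Pushing `Edit 2 "Y"` and then `Edit 1 "X"` past the three renames reproduces the file names derived above: the edits end up targeting X and Y respectively, while the renames are unchanged.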

3.2.2 Abstract Interface

The example in the previous section shows that if we commute state transformations the resulting sequence of transformations is not guaranteed to produce the correct state. Fortunately, the example did illustrate that we can derive new state transformations, and hence new patches, while reordering adjacent patches. This principle is the intuition behind the patch commute operation.

We give the following abstract definition of commute, similar to that found in the Darcs manual [Rou09b].

Definition 3.2.1. For two patches oAa and aBb we define an operation, which may fail, called commute such that if oABb commutes to the patches oB’A’b, then we write oABb \(\leftrightarrow\) oB’A’b.

While the details of the Darcs commute implementation are beyond the scope of this document, we assume the Darcs patch commute is implemented in such a way that properties such as the following hold.

Property 3.2.1. Patch commute is self-inverting. For example, if AB \(\leftrightarrow\) B’A’, then B’A’ \(\leftrightarrow\) AB.

Property 3.2.2. Patch commute preserves the pre-context and gives an equivalent post-context of the sequence when adjacent patches are commuted. For example, if aAbBc \(\leftrightarrow\) xA’yB’z, then it must be the case that a = x (This is true because the patch sequence to the left, if any, has not been altered), and we define z to be equivalent to c, while the relationship between b and y is unknown. Intuitively we want the sequence that results from commute to define the same repository state.

We want the above properties so that patch commute will be an equivalence relation on sequences of patches. For patch sequences that are related by some number of commutes we write \(\leftrightsquigarrow\) and say “can be commuted to.” For example, if AB \(\leftrightarrow\) B’A’, then AB \(\leftrightsquigarrow\) B’A’ after just one commute.

For the relation \(\leftrightsquigarrow\) to be an equivalence relation, it must satisfy the following [Rot02] for all patch sequences x, y and z:

- \(x \leftrightsquigarrow x\);

- if \(x \leftrightsquigarrow y\) then \(y \leftrightsquigarrow x\);

- if \(x \leftrightsquigarrow y\) and \(y \leftrightsquigarrow z\) then \(x \leftrightsquigarrow z\).

Here we take for granted that the relation \(\leftrightsquigarrow\) forms an equivalence relation, much as we assume that the Darcs commute implementation is correct. That is, the specification of Darcs commute, e.g., Patch Theory, specifies that the relation \(\leftrightsquigarrow\) must be an equivalence relation, and it would be a defect in the Darcs implementation if it were not. For this reason, we do not give a proof here. Providing a rigorous proof that \(\leftrightsquigarrow\) forms an equivalence relation is left as future work.

Every equivalence relation partitions elements into disjoint sets known as equivalence classes [Rot02]. Here the equivalence classes are sets of patch sequences that define the same final repository state, but this is not to say that all sequences that define a common final repository state are in the same equivalence class.

In Definition 3.1.3 we said that a context is a sequence of patches. Now that we can use the relation \(\leftrightsquigarrow\) to talk about equivalent sequences of patches we may also talk about equivalent contexts. By equivalent context we mean sequences of patches that are equivalent under the relation \(\leftrightsquigarrow\). Equivalent contexts should define identical repository states. To fully define equivalent contexts we also need to consider inverse patches in the next section. Also, we do not distinguish in our notation between contexts that are identical and contexts that are equivalent.

In summary, we see that when it is possible to commute patches, the pre- and post-contexts of the patch sequences are equivalent and the operation of commutation results in new patches that are semantically linked to the original patches.

3.3 Inverse Patches

The idea of inverse patches is borrowed from the Darcs manual [Rou09b]. The inverse of patch B is denoted \(\mathbf{\bar{B}}\), and has the property that the state transformation in \(\mathbf{\bar{B}}\) is the inverse of the state transformation in B. We define the pre-context of \(\mathbf{\bar{B}}\) to be the same as the post-context of B. The composition \(\mathbf{\bar{B}}\)B results in a context that defines the same repository state as the pre-context of B. For this reason, we define the post-context of \(\mathbf{\bar{B}}\) to be equivalent to the pre-context of B. In our notation we write oBb and b\(\mathbf{\bar{B}}\)o, as stated by the following property.

Property 3.3.1. Let oBb be a patch and let x\(\mathbf{\bar{B}}\)y be the inverse patch. We define o to be equivalent to y and b to be equivalent to x.

The intuition behind this property is that each patch has an inverse patch which nullifies, or undoes, the effects of the patch including resetting to an equivalent context.
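The state-level part of this intuition can be sketched in a few lines. This is a hypothetical model, not Darcs code: a repository is reduced to a list of file names, the only patch is a rename, and the pre-context is assumed to guarantee that the target name is free. The names `Repo`, `Toy`, `applyToy`, and `invert` are illustrative.

```haskell
-- A repository is modelled as a list of file names only.
type Repo = [String]

-- The only patch in this sketch is a rename.
data Toy = Rename String String
  deriving (Eq, Show)

-- Apply a rename to every matching file name.
applyToy :: Toy -> Repo -> Repo
applyToy (Rename x y) = map (\f -> if f == x then y else f)

-- The inverse patch undoes the state transformation of the patch.
invert :: Toy -> Toy
invert (Rename x y) = Rename y x
```

Applying a rename and then its inverse returns the original list of names, which mirrors the requirement that the post-context of the inverse defines the same repository state as the pre-context of the patch.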

3.4 Equality

The properties of patches give rise to the following result which is useful for determining when contexts are equivalent.

Property 3.4.1. Given two patches, xAy and uBv, that contain the same transformation of state, T, x is equivalent to u if and only if y is equivalent to v.

Property 3.4.1 is useful for proving when contexts are equivalent after performing a series of commutes, or when examining two patches that start or end in the same context.

Note that given two arbitrary patches xAy and uBv, Property 3.4.1 does not apply unless A and B share the same transformation. Without this extra condition the states defined by y and v may be the same without the contexts being equivalent.

3.5 Merge

Here we turn to the theory required to merge two sequences of patches. Property 3.5.1 shows how commutation lets us transform patches that were initially in different contexts so that they may be merged into a single sequence. The following property corresponds to Theorem 2 of the Darcs manual [Rou09c].

Property 3.5.1. Given four patches oAa, aBb, cA’b, and oB’c, we have oAaBb \(\leftrightarrow\) oB’cA’b if and only if a\(\mathbf{\bar{A}}\)oB’c \(\leftrightarrow\) aBb\(\mathbf{\bar{A'}}\)c.

As we will see later, a valuable property of merge is that it is symmetric. We can see that merge is symmetric by examining how we would use Property 3.5.1 in practice. By this property, we can merge two patches which have the same pre-context and put them into a sequence assuming that they may be commuted.

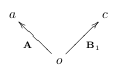

Using the same patches as the statement of Property 3.5.1, we could visualize the patches as being parallel (We consider patches that share a pre-context to be parallel whereas patches that share a post-context are said to be anti-parallel):

Starting with either oAa or oB’c we could arrive at two different sequences that share equivalent pre- and post-context. We achieve this with the following steps:

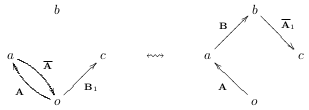

We start by applying the inverse patches a\(\mathbf{\bar{A}}\)o and c\(\mathbf{\bar{B'}}\)o respectively and get oAa\(\mathbf{\bar{A}}\)o and oB’c\(\mathbf{\bar{B'}}\)o, corresponding to:

Apply oB’c to the end of the sequence oAa\(\mathbf{\bar{A}}\)o and then use Property 3.5.1 to get oAa\(\mathbf{\bar{A}}\)oB’c \(\leftrightsquigarrow\) oAaBb\(\mathbf{\bar{A'}}\)c, corresponding to:

Next, apply oAa to the end of the sequence oB’c\(\mathbf{\bar{B'}}\)o and again use Property 3.5.1 to get oB’c\(\mathbf{\bar{B'}}\)oAa \(\leftrightsquigarrow\) oB’cA’b\(\mathbf{\bar{B}}\)a, corresponding to:

Remove patches b\(\mathbf{\bar{A'}}\)c and b\(\mathbf{\bar{B}}\)a from the right end of their respective sequences. This leaves us with two different sequences of patches having equivalent pre- and post-contexts. By being explicit about the context of the patches, we see that we are left with oAaBb and oB’cA’b. We can also see the symmetry of merge visually:

The symmetry of merge is important because it means patches can be merged in any order and the resulting repository will have an equivalent context which in turn means it will have the same state. The symmetry of merge is what allows us to realize our goal of letting users treat a repository as an unordered collection of changes.

3.6 Summary

The core of Darcs relies on manipulating patches in several key ways:

- There is a commute function that takes two patches and either fails or returns two new patches which correspond to similar transformations of state but have slightly different pre- and post-contexts.

- By commuting patches in sequences we are able to relax the definition of context to equivalent contexts.

- The pre- and post-context of each patch must be carefully tracked to avoid data corruption. This includes contexts which only exist temporarily, or theoretically, as patch sequences are commuted.

As the example commute in Section 3.2.1 shows, we cannot just apply patches whenever the state matches the domain of the patch’s state transformation. Doing so could lead to different results depending on the order in which the patches are applied. To avoid data corruption we use commute when we need to reorder patches. The goal of our work is to make sequence manipulations safe and give static guarantees about that safety. Here, “safe” means that the contexts are always respected and data corruption due to applying patches in the wrong context is avoided.

In the next chapter we will re-examine the properties defined in this chapter to see which ones may be statically enforced by the Haskell type checker.

Chapter 4 Checked Invariants

Now that we have established the most fundamental properties and constraints of Darcs patch manipulation in Chapter 3, we will show our way of encoding the invariants into Haskell types.

Our goal is to ensure the properties from Chapter 3 are checked at compile time. We also seek to find a balance between spending all of Darcs development time on correctness versus writing new code and adding useful features. An overview of the properties we cover is given in this table:

| Property | Description | Discussed in Section |
|---|---|---|
| Definition 3.1.4 | Patch | Section 4.5 |
| Definition 3.1.5 | Pre- and post-context | Section 4.5 |
| Definition 3.2.1 | Commute | Section 4.7 |
| Definition 3.1.1 | Repository and patch sequence | Section 4.8 |
| Property 3.5.1 | Merge | Section 4.9 |
| Property 3.4.1 | Patch equality | Section 4.10 |

Throughout this chapter we make use of existentially quantified types and Generalized Algebraic Data Types (GADTs). A brief introduction to existential types is given in Appendix A. A brief introduction to GADTs is given in Appendix B.

4.1 Sealed Types

One technique that we rely on heavily is the use of existentially quantified types. We use existentially quantified type variables in several different ways. The most basic appears in our Sealed data type.

Existentially quantified types give us a way to mark some of our types as distinct from all other types. We use a special data type, called Sealed, to hold the existentially bound types. Using the GADT extension it is defined as follows:
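A minimal sketch of this definition in GADT syntax follows. The helper value `sealedLists` is a hypothetical illustration and is not part of the definition.

```haskell
{-# LANGUAGE GADTs #-}

-- The type x is mentioned by the constructor's argument but not by its
-- result, so it is existentially quantified: hidden inside Sealed.
data Sealed a where
  Sealed :: a x -> Sealed a

-- Two lists with different element types share one type once sealed.
sealedLists :: [Sealed []]
sealedLists = [Sealed "abc", Sealed [True, False]]
```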

Using the Sealed data constructor, the type parameter x is hidden inside the Sealed type. The only thing we can currently recover about the existentially quantified type x is that it exists. This means that when we pattern match on a value of type Sealed:
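A sketch of such a pattern match, assuming the GADT-style definition of Sealed and a hypothetical function f that works on sealed lists:

```haskell
{-# LANGUAGE GADTs #-}

data Sealed a where
  Sealed :: a x -> Sealed a

-- Matching on Sealed brings a fresh, anonymous element type into scope.
-- The body may only use xs in ways that work for every element type,
-- such as passing it to the polymorphic function length.
f :: Sealed [] -> Int
f (Sealed xs) = length xs
```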

The type system must invent a new type for x, referred to as an eigenvariable, inside the pattern match of f. Again, this eigenvariable for x is distinct. The only type it is equal to is itself. We also cannot expose the eigenvariable to a higher level, although we can pass the eigenvariable to a polymorphic function.

4.2 Witness Types

We consider a witness type to be a type that demonstrates that a particular property is true. The witness acts as evidence of the property.

We use this idea to represent a proof of type equality. The following EqCheck type represents an equality check between two types, a and b. It is written in GADT notation, explained in Appendix B:
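A minimal sketch of such a type follows. The helper `castVia` is a hypothetical addition that shows how a consumer might use the witness; it is not part of the definition.

```haskell
{-# LANGUAGE GADTs #-}

-- IsEq can only be built when both type arguments are the same type.
-- NotEq carries no information and can be built at any pair of types.
data EqCheck a b where
  IsEq  :: EqCheck a a
  NotEq :: EqCheck a b

-- Hypothetical helper: with IsEq in hand, an a may be returned as a b.
castVia :: EqCheck a b -> a -> Maybe b
castVia IsEq  x = Just x
castVia NotEq _ = Nothing
```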

If the types a and b are equal, then we may use the data constructor IsEq, otherwise we must use NotEq. At the end of the next section we give an example of how this type can witness a proof.

4.3 Phantom Types

A phantom type is a type that has no value associated with it, such as phantom in the following:
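A minimal sketch, assuming that P wraps an Int (consistent with the value P 5 used below); the accessor `unP` is a hypothetical addition:

```haskell
-- The type variable phantom appears on the left of the equals sign only.
data P phantom = P Int

-- Hypothetical accessor: the wrapped Int is available at any phantom type.
unP :: P phantom -> Int
unP (P n) = n
```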

Above, the type variable phantom has no value associated with it on the right-hand side of the equal sign. This means that whenever we construct a value of type P we may also give a type for phantom. Since phantom has no value associated with it, it is free to unify with anything in the type system.

For example each of the following is valid, even within the same program:
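Some illustrative possibilities, assuming P wraps an Int; the value names and the chosen phantom types are hypothetical:

```haskell
data P phantom = P Int

-- The same value, P 5, branded with three unrelated phantom types.
asInt :: P Int
asInt = P 5

asString :: P String
asString = P 5

asFunction :: P (Bool -> Bool)
asFunction = P 5

-- Hypothetical accessor used to inspect the wrapped Int.
unP :: P phantom -> Int
unP (P n) = n
```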

We could imagine each of the above examples as branding the value P 5. In other words, one application of phantom types is that they allow us to embed extra bits of information in our types. In particular, we want to attach evidence, or proofs, to our types; that is, we want to associate the phantom type with a witness type.

4.4 Example

We would like to combine witness types and phantom types so that our proof carrying types appear as phantom types. A partial justification for this is that associating a full patch sequence with each context type would result in an intolerable run-time overhead.

Relying on the accuracy of witness types when they appear as phantom types can be problematic; when a value is constructed the type of a phantom is essentially arbitrary. To be able to rely on the information embedded in phantom types we need ways to control the type unification.

One approach is to hide the data constructors and only expose specialized functions for constructing the datatype. These constructors are often known informally as smart constructors. In our case, we might create the mkIntP smart constructor which only allows for the construction of values having the type P Int:

We could make a similar smart constructor for values of type P String:
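A sketch of both smart constructors, assuming P wraps an Int. In a real module the data constructor P would be left out of the export list, so that mkIntP and mkStringP are the only ways to build a value; the name `mkStringP` and the accessor `unP` are assumptions.

```haskell
data P phantom = P Int

-- Only ever produces values branded with Int.
mkIntP :: Int -> P Int
mkIntP = P

-- Only ever produces values branded with String.
mkStringP :: Int -> P String
mkStringP = P

-- Hypothetical accessor used to inspect the wrapped Int.
unP :: P phantom -> Int
unP (P n) = n
```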

This works well as long as the set of tags is either completely open or closed to a small set of types. The reason is simple: either we provide full access to the data constructor or we make a smart constructor for each allowed tag. Suppose instead that there are specific rules about what is a valid tag but the set of allowed tags is unbounded. Now we need a new approach.

In the previous section we defined the EqCheck a b witness type. Now we combine the concept of witness types with existentially quantified types.

As an example, suppose we have another data type E, which uses existential quantification on the type variables a and b:
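One way to write such a type, as a sketch in GADT syntax; the original may have used an explicit forall instead:

```haskell
{-# LANGUAGE GADTs #-}

data EqCheck a b where
  IsEq  :: EqCheck a a
  NotEq :: EqCheck a b

-- Neither a nor b appears in the result type, so both are existentially
-- quantified. The third field ties the witness to the first two fields.
data E where
  E :: a -> b -> EqCheck a b -> E
```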

To construct a value of type E we must supply three values: a value of type a, a value of type b, and a value of type EqCheck a b. The type of the E constructor forms a relationship between the first two input values and the EqCheck a b value. To illustrate this point the following is valid:

While this example is invalid:

The second example could be made valid by using NotEq instead as follows:
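The three cases can be sketched as follows, assuming the GADT-style definitions of EqCheck and E. The concrete values are hypothetical, and the invalid case is kept in a comment because it does not type check.

```haskell
{-# LANGUAGE GADTs #-}

data EqCheck a b where
  IsEq  :: EqCheck a a
  NotEq :: EqCheck a b

data E where
  E :: a -> b -> EqCheck a b -> E

-- Valid: both fields are Char, so IsEq :: EqCheck Char Char fits.
valid :: E
valid = E 'a' 'b' IsEq

-- Invalid: IsEq would need the type EqCheck Char String, which it cannot have.
-- invalid :: E
-- invalid = E 'a' "b" IsEq

-- Valid again: NotEq may be used at any pair of types.
alsoValid :: E
alsoValid = E 'a' "b" NotEq
```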

When we pattern match on a value of type E we can use the witness type EqCheck to gain information about the existentially quantified types a and b:
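A sketch of such a function, assuming the GADT-style definitions of EqCheck and E. The body, which places both values in one list, is a hypothetical way to show that the type checker accepts the equality.

```haskell
{-# LANGUAGE GADTs #-}

data EqCheck a b where
  IsEq  :: EqCheck a a
  NotEq :: EqCheck a b

data E where
  E :: a -> b -> EqCheck a b -> E

test :: E -> Int
-- Matching on IsEq tells the type checker that x and y share a type,
-- so they may be placed in the same list.
test (E x y IsEq)  = length [x, y]
-- With NotEq nothing is learned; writing [x, y] here would be rejected.
test (E _ _ NotEq) = 0
```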

At the point of pattern matching in test we know more than just which data constructor of EqCheck was used, we also recover information about the type equality status of a and b. In the first pattern match the IsEq constructor tells the type checker that a and b are the same type even though a and b are existentially quantified. Without this extra information, the type system would treat a and b as distinct types.

In this example our witness type provides a proof that is stronger than a run-time check. Here, the type checker is able to see that the types are the same. In our example no run-time check is needed and therefore no cast of a to b is needed, but in some cases it can still be useful. The complications of providing run-time type equality are discussed in Section 5.1.

When a run-time check is desired to determine type equality we also need a dynamic cast [BS02]. In such a case, a value of type EqCheck a b can be useful for passing around the evidence from the equality check. The key point is that by pattern matching on the IsEq data constructor we inform the type system that the two types a and b of the EqCheck are equal. This allows us to use the IsEq data constructor as a first class proof of type equality at run-time.

4.5 Patch Representation

Before we examine how to represent patch contexts, we first look at how the transformations that make up patches are represented. We begin by looking at a simplified definition of the Prim data type in the Darcs implementation. This abstraction is the primitive representation that corresponds most closely to the Patch Theory discussed in Chapter 3.

    data Prim where
        Move :: FileName -> FileName -> Prim
        DP :: FileName -> DirPatchType -> Prim
        FP :: FileName -> FilePatchType -> Prim
        ChangePref :: String -> String -> String -> Prim

Here we list all of the data constructors that appear in the Darcs source, except the Split data constructor, which is omitted because it is obsolete. Each one is explained as follows:

- Move: Represents a file or directory rename.

- DP: The given file name is either added or removed based on the value of DirPatchType.

- FP: The given file name is either added, removed, or modified based on the value of FilePatchType.

- ChangePref: Changes a preference setting for the repository.

The first patch property that we are concerned with is that patches have both pre- and post-context, described in Definition 3.1.5. To encode this in Haskell’s type system we use GADTs and phantom types to represent context for patches. Thus we have the following definition:

    data Prim x y where
        Move :: FileName -> FileName -> Prim x y
        DP :: FileName -> DirPatchType x y -> Prim x y
        FP :: FileName -> FilePatchType x y -> Prim x y
        ChangePref :: String -> String -> String -> Prim x y

The phantom types x and y correspond to the pre- and post-context respectively. In our type encoding we represent only equivalent contexts, as described in Section 3.2.2. Each data constructor corresponds to a kind of patch that changes the context, and the phantoms express this transformation from x to y.

The main relationship which is expressed by our use of phantom types is that of how the context is changed by a patch or by a sequence of patches. Although this may seem like a simple relationship, the types that can be expressed this way are still quite helpful in constraining the possible operations and also useful as machine checkable documentation.

4.6 Directed Types

Darcs patches have a notion of transforming between contexts. This naturally leads us to container types that are “directed”, and transform from one context to another.

4.6.1 Directed Pairs

The simplest directed type is a directed pair.
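A sketch of the definition, using the type variable names that the surrounding text refers to. The fixity declaration, the value `example`, and the function `runPair` are assumptions added for illustration.

```haskell
{-# LANGUAGE ExistentialQuantification, TypeOperators #-}

infixr 1 :>

-- A forward pair: the first element ends at z, the second starts at z,
-- and z itself is hidden from the type of the pair.
data (a1 :> a2) x y = forall z. (a1 x z) :> (a2 z y)

-- Either String Int paired with Int -> Bool; the shared Int is hidden.
example :: (Either :> (->)) String Bool
example = Right (4 :: Int) :> even

-- The hidden type can still be used, as long as it does not escape.
runPair :: (Either :> (->)) e r -> Either e r
runPair (v :> g) = fmap g v
```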

Our definition of directed pairs uses the TypeOperators GHC extension that allows type constructors to be infix. We only use infix type constructors because we find them syntactically pleasing. We refer to :> as a forward pair.

In the above definition the types a1 and a2 are the element types in the pair. The forall keyword is used to make z an existentially quantified type variable. When two types are placed in a forward pair using :> part of each type must match. Suppose we had the two types, Either String Int and Int -> Bool, then we could create the type, (Either :> (->)) String Bool. Notice that the Int in both types gets hidden due to the existential quantification of z.

Using the Prim type we could store a pair of patches:
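A sketch of such a pair, assuming a cut-down Prim that keeps only the Move constructor and a FileName that is a plain String; the value name `renames` is hypothetical.

```haskell
{-# LANGUAGE ExistentialQuantification, GADTs, TypeOperators #-}

type FileName = String

-- Cut-down stand-in for Prim with only the rename constructor.
data Prim x y where
  Move :: FileName -> FileName -> Prim x y

infixr 1 :>
data (a1 :> a2) x y = forall z. (a1 x z) :> (a2 z y)

-- The post-context of the first patch and the pre-context of the second
-- are forced to be the same hidden type z.
renames :: (Prim :> Prim) x y
renames = Move "Y" "Z" :> Move "X" "Y"
```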

Much like our example in Section 4.4, we insert the patches into the forward pair as they are constructed. This acts to partially constrain the phantom types of the Prim type and also adds a relationship between the phantom types through the existential quantification in the directed pair.

We use existentially quantified types to represent context for two main reasons: (a) contexts are implicitly stored by Darcs, and (b) we need to work with an unbounded number of distinct contexts. Either of these two points means we would not be able to manage an explicit type for each context. Thus, we are using the type system to do a great deal of the work for us. We do use one concrete type as a context: the Haskell unit type, (), is the type of the empty repository.

4.6.2 Forward Lists

We create the forward list type, which can be used to store types that are parametrized over exactly two other types. One such type is Prim; functions are another suitable element type for forward lists. For concrete examples using functions see Appendix C.
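A sketch of the forward list type in GADT syntax, using the constructor names that the following paragraphs refer to. The fixity declaration and the helper `lengthFL` are assumptions added for illustration.

```haskell
{-# LANGUAGE GADTs #-}

infixr 5 :>:

-- A list whose neighbouring elements must agree on the type between them.
data FL a x z where
  (:>:) :: a x y -> FL a y z -> FL a x z
  NilFL :: FL a x x

-- Counting elements never needs to know the hidden intermediate types.
lengthFL :: FL a x z -> Int
lengthFL NilFL      = 0
lengthFL (_ :>: xs) = 1 + lengthFL xs

-- A chain of functions in application order: Int to Int, then Int to Bool.
chain :: FL (->) Int Bool
chain = (+ 1) :>: even :>: NilFL
```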

In the definition above, a is the element type stored in the list, and x and z are types which enforce an ordering on the elements of the list.

The constructor NilFL represents the empty forward list. Because an empty forward list has no elements and carries no transformation we give it the type FL a x x. The constructor (:>:), takes some element with type parameters x and y, a forward list with the same element type but type parameters y and z, and produces a forward list with type parameters x and z. The type y is hidden inside the forward list as an existentially quantified type variable. This works for storing elements but it does make some operations tricky as we will see later.

An example of a forward list holding values of type Prim:
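A sketch of such a list, assuming a cut-down Prim with only the Move constructor; the names `swapXY` and `movesFL` are hypothetical.

```haskell
{-# LANGUAGE GADTs #-}

type FileName = String

-- Cut-down stand-in for Prim with only the rename constructor.
data Prim x y where
  Move :: FileName -> FileName -> Prim x y

infixr 5 :>:
data FL a x z where
  (:>:) :: a x y -> FL a y z -> FL a x z
  NilFL :: FL a x x

-- Rename Y to Z, then X to Y, then Z to X.
swapXY :: FL Prim x y
swapXY = Move "Y" "Z" :>: Move "X" "Y" :>: Move "Z" "X" :>: NilFL

-- Hypothetical helper that lists the renames in order.
movesFL :: FL Prim x y -> [(FileName, FileName)]
movesFL NilFL             = []
movesFL (Move a b :>: ps) = (a, b) : movesFL ps
```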

The above sequence of patches would swap the names of the files X and Y. Once the list has been constructed if we try to reorder the elements we would get a type error. For example, this function would not be valid:
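A sketch of such a function, with hypothetical names and a cut-down Prim. The rejected definition is kept in a comment because it does not type check; an order-preserving function is included for contrast.

```haskell
{-# LANGUAGE GADTs #-}

type FileName = String

data Prim x y where
  Move :: FileName -> FileName -> Prim x y

infixr 5 :>:
data FL a x z where
  (:>:) :: a x y -> FL a y z -> FL a x z
  NilFL :: FL a x x

-- Rejected: b starts in the hidden context where a ends, not in x, so it
-- cannot become the first element of a list that starts at x.
-- swapFirstTwo :: FL Prim x z -> FL Prim x z
-- swapFirstTwo (a :>: b :>: rest) = b :>: a :>: rest

-- Accepted: the elements are put back in their original order.
rebuild :: FL Prim x z -> FL Prim x z
rebuild (a :>: b :>: rest) = a :>: b :>: rest
rebuild short              = short

lengthFL :: FL a x z -> Int
lengthFL NilFL      = 0
lengthFL (_ :>: xs) = 1 + lengthFL xs
```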

Once the list is created the context types become fixed. After that we can only put them into a forward list if we respect the relationships between the contexts.

4.7 Expressing Commutation

In Definition 3.2.1, we define commutation of patches as a partial relation. We can now give a type for commute on Prim patches:
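The type can be sketched as below. The signature follows the way commutePrim is used later in this chapter; the body is a toy stand-in, not the Darcs implementation, and it works over a cut-down Prim with a hypothetical Touch constructor standing for an edit to a file.

```haskell
{-# LANGUAGE ExistentialQuantification, GADTs, TypeOperators #-}

type FileName = String

-- Cut-down stand-in for Prim: a rename and a hypothetical edit.
data Prim x y where
  Move  :: FileName -> FileName -> Prim x y
  Touch :: FileName -> Prim x y

infixr 1 :>
data (a1 :> a2) x y = forall z. (a1 x z) :> (a2 z y)

-- The pair keeps its outer contexts x and y; only the hidden middle
-- context differs between the input and the result.
commutePrim :: (Prim :> Prim) x y -> Maybe ((Prim :> Prim) x y)
commutePrim (Touch f :> Move a b)
  | f == a    = Just (Move a b :> Touch b)  -- the edit follows the rename
  | f == b    = Nothing                     -- the rename target already exists
  | otherwise = Just (Move a b :> Touch f)  -- independent files
commutePrim (Move a b :> Touch f)
  | f == b    = Just (Touch a :> Move a b)  -- the edit moves back to the old name
  | f == a    = Nothing                     -- a no longer exists after the rename
  | otherwise = Just (Touch f :> Move a b)
commutePrim _ = Nothing                     -- other pairs are left out of the sketch
```

At this lowest level the constructors accept any pair of contexts, so the types do not check that the toy rules themselves are right; they only fix how the result may be combined with its neighbours.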

The concrete implementation of commutePrim is important to Darcs but is not particularly relevant to this discussion and is omitted here. In the actual implementation a type class is used so that commute is polymorphic over the various patch types. Notice that commutePrim has a Maybe return type. This is because patch commutation is not a total relation. Again, these phantom types represent not a single context, but an entire equivalence class, as described in Section 3.2.2.

4.8 Patch Sequences

Patches have an associated state transformation and we need to apply patches in a way that their contexts are respected. When a patch is recorded we know that it will apply in the current context of the repository. If we also store patches in the order they are recorded, then we know they can also be applied in that order. It would be useful if we had a way to store patches such that their application domains are ensured to be in the correct order.

In Section 4.6.2, we introduce a data type, FL, for forward lists. This data type is suitable for storing chains of functions in application order. Here we use forward lists for storing sequences of patches. Instead of storing functions by domain and range, we store patches by pre- and post-context.

Storing patches in context order allows us to bundle up sequences of patches and concern ourselves with just the pre- and post-context of the entire sequence. When extracting elements from the sequence, the context types are lost and we only retain the relationship between context types.

When extracting patches from an FL sometimes we do know which context a patch should have but our use of existentially quantified types means the type system is pessimistic about context equivalence. To work around this we use patch equality functions described in Section 4.10.

By combining commutePrim from the previous section with forward lists we can commute a patch with a sequence of patches. We give this operation the name commuteFL:

    commuteFL :: (Prim :> FL Prim) x y -> Maybe ((FL Prim :> Prim) x y)
    commuteFL (a :> b :>: bs) = do
        b' :> a' <- commutePrim (a :> b)
        bs' :> a'' <- commuteFL (a' :> bs)
        Just (b' :>: bs' :> a'')
    commuteFL (a :> NilFL) = Just (NilFL :> a)

The monad instance of Maybe handles the cases where commutePrim fails and returns Nothing. The type checker now makes it very difficult to give an incorrect definition of commuteFL.

There are very few incorrect definitions we could give above that would type check. For example, we cannot simply return the input because the type says that the order of the forward list and the patch must be switched in the return value. If we try to return a different list than b' :>: bs', such as NilFL or b' :>: NilFL, then we will find that the type checker complains.

Some incorrect definitions do still type check. We could rewrite commuteFL so that it returns a :>: xs :> x, where x is the last element of bs and xs followed by x is the same sequence as b :>: bs. Two other possibilities are returning undefined or Nothing. Inspecting for one of these mistakes is much easier than manually checking that all the steps above respect patch context.
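To experiment with these type errors, the definitions can be placed in a self-contained module. The following is a minimal sketch, not the Darcs code: the Prim type with its single Edit constructor, the rule that edits to different files commute, and the helper namesFL are assumptions made purely for illustration. FL and :> follow the definitions used in this chapter.

```haskell
{-# LANGUAGE ExistentialQuantification, GADTs, TypeOperators #-}

-- Forward list: the post-context of each element is the pre-context of the next.
data FL a x z where
  NilFL :: FL a x x
  (:>:) :: a x y -> FL a y z -> FL a x z
infixr 5 :>:

-- Sequential pair whose intermediate context z is hidden.
data (a1 :> a2) x y = forall z. (a1 x z) :> (a2 z y)
infixr 1 :>

-- Hypothetical stand-in for Prim: a patch that edits one named file.
newtype Prim x y = Edit FilePath

-- Toy commutation rule (an assumption, not the Darcs rule):
-- edits to different files commute, edits to the same file do not.
commutePrim :: (Prim :> Prim) x y -> Maybe ((Prim :> Prim) x y)
commutePrim (Edit f :> Edit g)
  | f /= g    = Just (Edit g :> Edit f)
  | otherwise = Nothing

-- Move a single patch past a whole sequence, one element at a time.
commuteFL :: (Prim :> FL Prim) x y -> Maybe ((FL Prim :> Prim) x y)
commuteFL (a :> NilFL)      = Just (NilFL :> a)
commuteFL (a :> (b :>: bs)) = do
  b'  :> a'  <- commutePrim (a :> b)
  bs' :> a'' <- commuteFL (a' :> bs)
  Just ((b' :>: bs') :> a'')

-- Forget the contexts and list the file names, for inspection only.
namesFL :: FL Prim x y -> [FilePath]
namesFL NilFL           = []
namesFL (Edit f :>: ps) = f : namesFL ps
```

In this sketch, replacing b' :>: bs' with NilFL in the last line of commuteFL is rejected by the type checker, because the hidden context in front of a'' cannot be shown equal to x.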

We have been able to implement a full library of sequence manipulations for both forward and reverse lists. Many of the definitions, such as the ones named in Appendix C, work on any forward list. Others, such as commuteFL, work only for sequences of patches.

4.9 Patch Merge

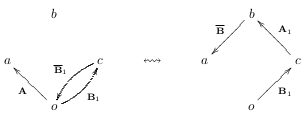

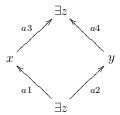

Property 3.5.1 tells us that when two patches commute and share the same pre-context, we can merge them. Whenever patches, or sequences of patches, share a pre-context we say they are parallel. Similarly, when patches, or sequences of patches, share a post-context we say they are anti-parallel. The following types correspond to parallel and anti-parallel pairs:

data (a1 :\/: a2) x y = forall z. (a1 z x) :\/: (a2 z y)
data (a3 :/\: a4) x y = forall z. (a3 x z) :/\: (a4 y z)

Notice how these definitions correspond to our previous visualization of the symmetry of merge, except that here we are using existential quantification for the pre- and post-contexts of the sequences:

The input to our merge function is a parallel pair, for example for the Prim type this would be:

The implementation of merge, at least for pairs of patches, follows the symmetry of merge example in Section 3.5. Our merge implementation returns the results in an anti-parallel pair because it returns symmetric results. That is, instead of returning just the merged sequence, two patches are returned so that two different sequences, both having the same pre- and post-contexts, can be constructed from the result.

The two sequences that can be built are documented within and constrained by the type signature of merge. For example, suppose we have the patches p1 and p2 and we use merge to get the patches p1' and p2':

Using \(a\) for the existentially quantified type in the pair p1 :\/: p2 and \(b\) for the existentially quantified type in the pair p2' :/\: p1', the types would be as follows:

The only context preserving sequences we could create with an FL are these two:

Any other forward list sequences we try to construct from the above four patches would result in type errors!
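The shape of this argument can be sketched in a small module. This is an illustration under stated assumptions, not the Darcs implementation: the signature given to merge is one plausible reading of the description above, the toy Prim type and its never-conflicting merge are hypothetical, and TwoPaths, mergePaths and namesFL are helper names introduced here.

```haskell
{-# LANGUAGE ExistentialQuantification, GADTs, TypeOperators #-}

data FL a x z where
  NilFL :: FL a x x
  (:>:) :: a x y -> FL a y z -> FL a x z
infixr 5 :>:

-- Parallel pair: both halves start in the hidden context z.
data (a1 :\/: a2) x y = forall z. (a1 z x) :\/: (a2 z y)
-- Anti-parallel pair: both halves end in the hidden context z.
data (a3 :/\: a4) x y = forall z. (a3 x z) :/\: (a4 y z)
infix 1 :\/:, :/\:

-- Hypothetical stand-in for Prim: a patch that edits one named file.
newtype Prim x y = Edit FilePath

-- Toy merge (assumption): edits never conflict, so each patch is
-- carried over unchanged, only with different contexts.
merge :: (Prim :\/: Prim) x y -> (Prim :/\: Prim) x y
merge (Edit f :\/: Edit g) = Edit g :/\: Edit f

-- Two sequences sharing the pre-context a and one hidden post-context b.
data TwoPaths a = forall b. TwoPaths (FL Prim a b) (FL Prim a b)

mergePaths :: Prim a x -> Prim a y -> TwoPaths a
mergePaths p1 p2 = case merge (p1 :\/: p2) of
  p2' :/\: p1' -> TwoPaths (p1 :>: p2' :>: NilFL) (p2 :>: p1' :>: NilFL)
  -- Writing p1 :>: p1' :>: NilFL instead is rejected: p1 ends in
  -- context x while p1' starts in context y.

-- Forget the contexts and list the file names, for inspection only.
namesFL :: FL Prim x y -> [FilePath]
namesFL NilFL           = []
namesFL (Edit f :>: ps) = f : namesFL ps
```

Packing both sequences into TwoPaths makes the claim explicit in a type: the two orders start and end in the same contexts.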

4.10 Patch Equality

In Section 4.2 we introduced our type witness for type equality functions. Here we use that type to implement parallel and anti-parallel patch equality tests. To implement patch equality we must also introduce an unsafe operation; the problems with this are discussed in Section 5.1.2. We use the Eq2 type class for patch comparison which defines the following functions:

We refer to (=\/=) as parallel equality and (=/\=) as anti-parallel equality. These equality checks for patches are based on Property 3.4.1. Note that we do require a run-time check to implement both of the above equality functions.
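As a sketch of how such a class and its witness can fit together, consider the following module. The constructor names IsEq and NotEq, the exact method signatures, the toy Prim type and the helper dropHead are assumptions made for illustration and may differ from the Darcs source. The instance performs the run-time check and then uses an unsafe coercion to produce the witness.

```haskell
{-# LANGUAGE GADTs #-}
import Unsafe.Coerce (unsafeCoerce)

data FL a x z where
  NilFL :: FL a x x
  (:>:) :: a x y -> FL a y z -> FL a x z
infixr 5 :>:

-- Witness: matching on IsEq tells the type checker that a and b coincide.
data EqCheck a b where
  IsEq  :: EqCheck a a
  NotEq :: EqCheck a b

class Eq2 p where
  -- Parallel equality: equal patches from one pre-context share a post-context.
  (=\/=) :: p a b -> p a c -> EqCheck b c
  -- Anti-parallel equality: equal patches into one post-context share a pre-context.
  (=/\=) :: p a c -> p b c -> EqCheck a b

-- Hypothetical patch type: an edit to one named file.
newtype Prim x y = Edit FilePath

instance Eq2 Prim where
  -- The comparison happens at run time; the coercion records its outcome.
  Edit f =\/= Edit g = if f == g then unsafeCoerce IsEq else NotEq
  Edit f =/\= Edit g = if f == g then unsafeCoerce IsEq else NotEq

-- Remove p from the front of a sequence if the sequence starts with it.
-- Matching on IsEq is what allows qs to be returned at type FL p b d.
dropHead :: Eq2 p => p a b -> FL p a d -> Maybe (FL p b d)
dropHead p (q :>: qs) = case p =\/= q of
  IsEq  -> Just qs
  NotEq -> Nothing
dropHead _ NilFL = Nothing

-- Forget the contexts and list the file names, for inspection only.
namesFL :: FL Prim x y -> [FilePath]
namesFL NilFL           = []
namesFL (Edit f :>: ps) = f : namesFL ps
```

Without the IsEq match, dropHead does not type check: the sequence qs begins in a hidden context that the type checker cannot otherwise relate to b.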

4.11 Summary

Using a combination of phantom, witness and existential types we are able to describe many of the Patch Theory properties in Haskell types. The nature of Haskell’s type system means that these properties are checked for us at compile time.

We have not encoded all of the Darcs semantics and there are several key things which we do not express. For example, a more accurate encoding of context equivalence classes would directly use sequences of patches instead of existentially quantified types. We have chosen not to model the context types that way at this time, partly because of the extra programmer effort and partly because of the extra run-time overhead.

In the next chapter we will discuss these points in more detail as well as the ramifications of applying these ideas to Darcs.

Chapter 5 Discussion

We have outlined the core of the techniques we applied to the Darcs source code. Now we will discuss the implications of working with an existing code base and the direct benefits. Many of the pitfalls, setbacks and other hurdles we encountered are covered in Section 5.1. In Section 5.2 we give examples of how this work has improved the Darcs source code.

5.1 Difficulties

The approach we have taken is not without difficulties and trade-offs. In this section we outline the major problems we encountered.

5.1.1 Intentional Context Coercion

Although not defined in the Haskell 98 report, many Haskell implementations provide a function for arbitrarily changing the type of an expression. This function is commonly given the following name and type signature: unsafeCoerce :: a -> b

This function, unsafeCoerce, intentionally circumvents type safety to give the programmer ultimate control over the types in the program. This ability to circumvent type safety, which is occasionally useful, puts the burden of type soundness on the programmer. We apply a restriction to the generality of unsafeCoerce so that it can only affect part of the type of a value. We define the following patch coercion function:
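A minimal sketch of such a restricted coercion is given below, assuming the name unsafeCoerceP used in the rest of this chapter. The toy Prim type and the helper relabel are hypothetical and serve only to show the function in use.

```haskell
import Unsafe.Coerce (unsafeCoerce)

-- Only the two context parameters may change; the patch type a is fixed.
unsafeCoerceP :: a x y -> a b c
unsafeCoerceP = unsafeCoerce

-- Hypothetical patch type: an edit to one named file.
newtype Prim x y = Edit FilePath

-- Give a patch new contexts; the run-time value is untouched.
relabel :: Prim x y -> Prim b c
relabel = unsafeCoerceP

-- By contrast, unsafeCoerceP (Edit "f") :: Int is a type error,
-- because Int does not have the form a b c.
```

The signature is what provides the restriction: both sides must apply the same type constructor to two parameters, so the coercion can relabel contexts but cannot turn a patch into an unrelated type.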

There are times when we need to coerce, or change, context explicitly. One reason for this is that our contexts depend on run-time values, but we have other uses for unsafeCoerceP which arise from a purely pragmatic standpoint.

The following two sections give illustrations of when we use context coercion.

Context Equivalence

The development process for Darcs requires that any use of a function having a name that begins with “unsafe” be carefully scrutinized. In practice, the use of unsafeCoerceP is not common and the scrutiny happens on the public Darcs mailing list when source changes are submitted by contributors. One goal of Darcs development is to compartmentalize all uses of unsafe functions to a core set of modules that provide safe interfaces.

As an example of compartmentalizing unsafe functions, we favor the use of the type class function =\/= over the use of unsafeCoerceP. Although =\/= is defined in Section 4.10, we give the definition here as well for convenience:

We give an example instance based on a trivial patch type P:
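One plausible shape for the class and for a trivial instance is sketched below. It is a reconstruction under assumptions, not a quotation of the Darcs source: the witness type EqCheck with constructors IsEq and NotEq, the method signatures, and the helper isEq are introduced here for illustration. The instance always answers IsEq, since every value of P is the single constructor P.

```haskell
{-# LANGUAGE GADTs #-}
import Unsafe.Coerce (unsafeCoerce)

-- Witness of type equality (assumed shape).
data EqCheck a b where
  IsEq  :: EqCheck a a
  NotEq :: EqCheck a b

class Eq2 p where
  (=\/=) :: p a b -> p a c -> EqCheck b c
  (=/\=) :: p a c -> p b c -> EqCheck a b

-- Coercion restricted to the two context parameters.
unsafeCoerceP :: a x y -> a b c
unsafeCoerceP = unsafeCoerce

-- Trivial patch type: one constructor, no payload, arbitrary contexts.
data P x y = P

instance Eq2 P where
  -- Two values of P are always structurally equal, so the answer is always IsEq.
  P =\/= P = unsafeCoerceP IsEq
  P =/\= P = unsafeCoerceP IsEq

-- Helper for inspecting a witness at run time.
isEq :: EqCheck a b -> Bool
isEq IsEq  = True
isEq NotEq = False
```

Because this instance never returns NotEq, it is only as trustworthy as the contexts assigned to the values it compares.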

The instance of Eq2 for the Prim type is slightly more involved, but in essence the instance simply compares the patches for structural equality while relying on the type signature to constrain when the equality check is allowed. We will use this Eq2 instance for P in the next section as well.

The patch equality checks given here use type witnesses to carry information gained by doing the equality check. The techniques typically used in the literature require that the set of types which can be cast be known fully by the programmer to avoid the use of unsafeCoerce. Instead of using unsafeCoerce, a function for dynamic casting is provided between the types. This typically requires creating a type class instance for the types.

This technique can be found in the Haskell library Typeable [BS02]. Note that even Typeable can be used to derive unsound functions with type a -> b by creating “malicious” type class instances [Kis09].

Interfacing with Older Modules

In the Darcs implementation, the Repository.Old module is used for reading old-fashioned repositories. On the other hand, the newer Repository.Hashed module uses hashes for improved robustness and atomicity of operations. The Hashed module is written internally with our witness type style whereas the Old module only supports the witness types superficially in most places. Consider the readRepo function which reads from a repository and returns the set of patches stored in the repository:

readRepo :: (IsRepoType rt, RepoPatch p, ApplyState p ~ Tree)
         => Repository rt p wR wU wT
         -> IO (PatchSet rt p Origin wR)
readRepo r
  | formatHas HashedInventory (repoFormat r) = readRepoHashed r (repoLocation r)
  | otherwise = do Sealed ps <- Old.readOldRepo (repoLocation r)
                   return $ unsafeCoerceP ps

When reading the sequence of patches from the repository, we know that the returned sequence has a final context that matches the recorded context of the repository. The type signature of readRepo expresses this through the type variable wR, which appears both in the type of the repository argument and as the final context of the returned PatchSet. The function readOldRepo in the otherwise branch of the function does not have the right type to express this relationship whereas Hashed.readHashedRepo does. This lack of expressiveness is entirely pragmatic. To avoid rewriting the Old interface we carefully use unsafeCoerceP so that the sequence of patches returned by readOldRepo will unify with the sequence of patches returned by readHashedRepo.

Examples such as readRepo are not common, and can be avoided in theory, but in practice unsafeCoerceP can be used to save significant effort when the payoff for that effort is small.

5.1.2 Unsound Equality Examples

While unsafeCoerceP has legitimate uses, we must be quite cautious about one particular usage. If we are not careful we can combine =\/= with certain other functions, and completely circumvent the safety of Haskell’s type system. While this is very undesirable, we can learn to avoid this by understanding the examples in this section.

If we combine =\/= with a function that returns a type involving phantom types, and those phantom types are unconstrained with respect to the types of input parameters, then we can recreate unsafeCoerce :: a -> b.

The following examples demonstrate the problem of recreating an unsafe operation. Note, we use an additional feature of GHC for these examples known as lexically scoped type variables. The scope of type variables is introduced by the use of an explicit forall in the type signature. (While it could be argued that we should disallow lexically scoped type variables to avoid these unsound definitions, the approaches described in this document are significantly easier to express when using lexically scoped type variables. Additionally, it may be possible in some or all cases where lexically scoped type variables are used to instead employ clever usage of standard Haskell expressions and functions, such as asTypeOf.)

The first example uses a data constructor P that allows us to assign arbitrary types to its phantom types. We are using this as a placeholder for real patch types. We assume here that =\/= is defined for the type P a b such that it always returns IsEq, such as the definition in the previous section. We use this to derive an alternative definition of unsafeCoerce as follows:

unsafeCoerce :: forall a b. a -> b
unsafeCoerce x = case a =\/= b of
    IsEq -> x
    _ -> error "a = b, making this impossible"
  where (a, b) = (P, P) :: (P () a, P () b)

Below is another example that demonstrates that any function which returns phantoms that are unconstrained by the input types can be used to reconstruct unsafeCoerce. We use zipWithFL from Appendix C.3 but here we use the type Maybe to work around the type checking difficulties. We could have also avoided the use of Maybe and used unsafeCoerceP but this example demonstrates that unsound code can be written without needing direct access to unsafeCoerceP:

zipWithFL :: (forall r s u v x y. a r s -> b u v -> c x y)
          -> FL a q z -> FL b j k -> Maybe (FL c m n)
zipWithFL f (a :>: as) (b :>: bs) =
  case zipWithFL f as bs of
    Nothing -> Just (f a b :>: NilFL)
    Just cs -> Just (f a b :>: cs)
zipWithFL _ _ _ = Nothing

The above code will type check, but notice that the returned type, Maybe (FL c m n), has phantoms m and n that are unrelated to the input types. This allows the type checker to unify m and n with any other types. Consider this example of unsafeCoerce:

unsafeCoerce :: forall a b. a -> b
unsafeCoerce x = case a =\/= b of
    IsEq -> x
    _ -> error "a = b, making this impossible"
  where a :: FL P () a
        Just a = zipWithFL f (P:>:NilFL) (P:>:NilFL)
        b :: FL P () b
        Just b = zipWithFL f (P:>:NilFL) (P:>:NilFL)
        f _ _ = P -- The way P is constructed does not matter here.
                  -- f only needs to satisfy the type signature of
                  -- zipWithFL.

While the examples here may seem contrived, similar examples have occurred naturally during development making this a very real issue we must consider. We give both examples to illustrate that not only can constructors with phantom types be composed with =\/= in unsound ways, but so can any function which returns a value that has unconstrained phantom types. In this regard, we consider such functions unsafe and avoid them when possible.

We now investigate one exception to this rule. When a function returns an unconstrained phantom type as part of a Sealed type our program remains sound. This is because a type that has been hidden within the Sealed type cannot be passed up or returned to a higher level than the one at which it was existentially bound. For example, if we return the type Sealed (FL c m) from zipWithFL, then we cannot use lexically scoped type variables to get at the type n and the above definitions will not work.

Consider this definition of zipWithFL that uses Sealed:

zipWithFL :: (forall r s u v x y. a r s -> b u v -> c x y)
          -> FL a q z -> FL b j k -> Sealed (FL c m)
zipWithFL f (a :>: as) (b :>: bs) =
  case zipWithFL f as bs of
    Sealed cs -> Sealed (f a b :>: cs)
zipWithFL _ _ _ = Sealed NilFL

We could try to combine the result of this zipWithFL with =\/= but we no longer have access to the type that was previously named n and so the examples using lexically scoped type variables to control how it unifies will no longer apply.
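The sealed variant can be tried out in a self-contained module such as the following sketch. The definition of Sealed shown here, the trivial patch type P, and the helpers pairUp, lengthFL and sealedLength are assumptions introduced for illustration.

```haskell
{-# LANGUAGE GADTs, RankNTypes #-}

data FL a x z where
  NilFL :: FL a x x
  (:>:) :: a x y -> FL a y z -> FL a x z
infixr 5 :>:

-- Hides the last type parameter of its argument (assumed definition).
data Sealed a where
  Sealed :: a x -> Sealed a

zipWithFL :: (forall r s u v x y. a r s -> b u v -> c x y)
          -> FL a q z -> FL b j k -> Sealed (FL c m)
zipWithFL f (a :>: as) (b :>: bs) =
  case zipWithFL f as bs of
    Sealed cs -> Sealed (f a b :>: cs)
zipWithFL _ _ _ = Sealed NilFL

-- Trivial patch type with arbitrary contexts.
data P x y = P

-- Combining function whose result contexts are unconstrained.
pairUp :: P r s -> P u v -> P x y
pairUp _ _ = P

lengthFL :: FL a x y -> Int
lengthFL NilFL      = 0
lengthFL (_ :>: ps) = 1 + lengthFL ps

-- The sealed sequence can still be consumed, but only by code that
-- works for every possible final context.
sealedLength :: Sealed (FL a x) -> Int
sealedLength (Sealed ps) = lengthFL ps
```

Unpacking the result with a case expression yields a sequence whose final context is a fresh type known only inside that case branch, so it cannot be named in a signature.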